5. Identify the optimal sequence of capabilities to demonstrate or release

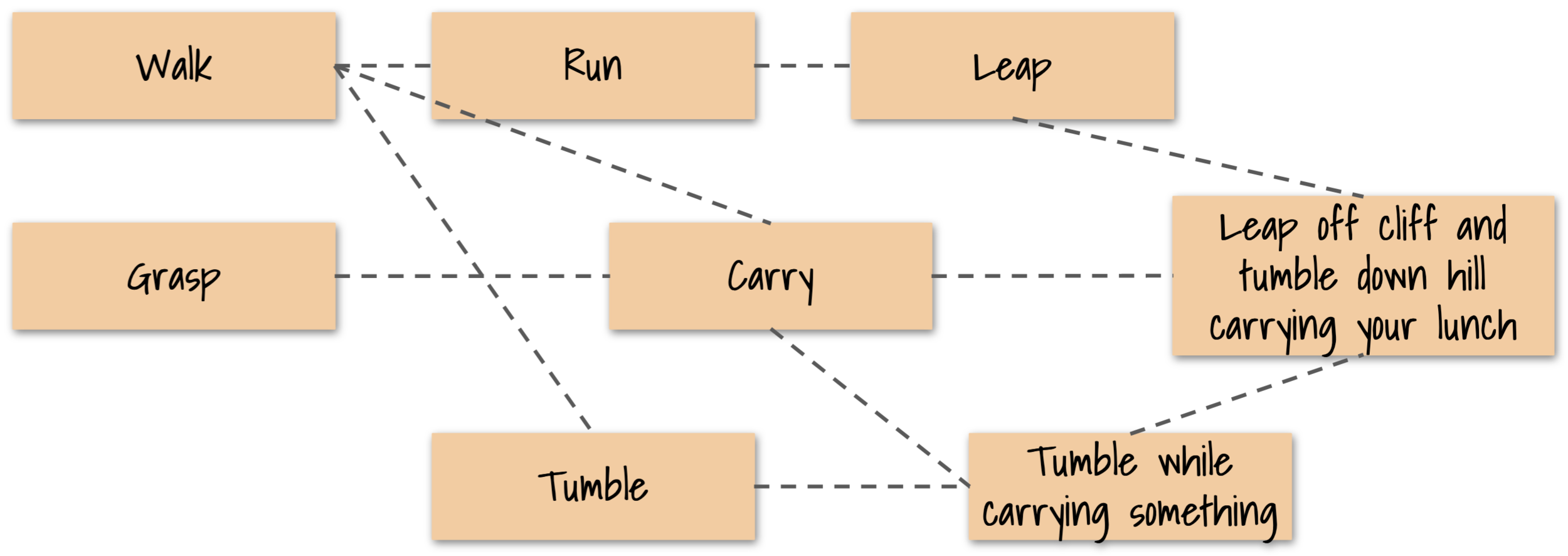

Instead of dividing up the solution into separate pieces that comprise the entire solution, think in terms of the separate capabilities that comprise the idea. Consider our banking application example that enables customers to get financial advice. What are the demonstrable capabilities that comprise that entire idea? If the technical approach includes using an AI-based system, then the capabilities might be something like these:

1. An AI system that can provide high quality financial advice based on someone’s account data and a set of financial goals chosen from a predefined set of goals.

2. Web service that can access the AI system.

3. Web app that can access the Web service.

4. Mobile app that can access the Web service.

Each of these is a discrete capability that can be built and demonstrated. The order in which they should be created depends on many things. One factor is risk: if item 1 cannot be achieved, then the other items should not even be started. So the first step is to demonstrate a proof of concept for item 1. We’ll call that capability 1a.
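As a minimal sketch of this sequencing idea, the capabilities can be written down as a small dependency graph and grouped into waves. The capability labels and the `build_waves` helper are hypothetical, and the graph assumes only what the list states: the Web service uses the AI system, and the two apps use the Web service. It orders when an end-to-end demonstration of each capability becomes possible; it does not imply that the development work itself must be serialized.

```python
from graphlib import TopologicalSorter

# Hypothetical labels for the capabilities in the list above.
# Each key maps to the set of capabilities it relies on.
DEPENDS_ON = {
    "1a: AI proof of concept": set(),
    "1: AI advice system": {"1a: AI proof of concept"},
    "2: Web service": {"1: AI advice system"},
    "3: Web app": {"2: Web service"},
    "4: Mobile app": {"2: Web service"},
}


def build_waves(depends_on):
    """Group capabilities into waves.

    Everything in a wave can be demonstrated end to end once all
    earlier waves have been demonstrated.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = build_waves(DEPENDS_ON)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

Under these assumptions the proof of concept is alone in the first wave, and the Web app and mobile app share the last one. Risk is only one of the factors mentioned, so a graph like this is an aid to discussion rather than a decision rule.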

If item 1a is demonstrated sufficiently to give confidence, then work might start on the other capabilities. Each of those can be developed by itself, even though item 2 interacts with item 1, and items 3 and 4 interact with item 2. This parallel work is possible if the people working on each capability collaborate to define interfaces, and if they define strategies for synchronizing their work whenever an interface needs to change. That should ideally include the creation of an automated integration test system that is run frequently.
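One way to picture an agreed interface and an automated integration check is sketched below. Every name here (`AdviceRequest`, `AdviceResponse`, `AdviceEngine`, `StubAdviceEngine`, `AdviceService`, `integration_check`) is an assumption made for illustration, not part of any real system. The idea is that the Web service team codes against the agreed interface and a stub, and the same check is later run against the real AI system.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class AdviceRequest:
    account_id: str
    goal: str  # assumed to be one of a predefined set of goals


@dataclass(frozen=True)
class AdviceResponse:
    account_id: str
    recommendations: tuple[str, ...]


class AdviceEngine(Protocol):
    """The interface the AI team and the Web service team agree on."""

    def advise(self, request: AdviceRequest) -> AdviceResponse: ...


class StubAdviceEngine:
    """Stand-in for the AI system so the Web service can be built in parallel."""

    def advise(self, request: AdviceRequest) -> AdviceResponse:
        text = f"Placeholder advice for goal '{request.goal}'"
        return AdviceResponse(request.account_id, (text,))


class AdviceService:
    """Hypothetical Web service layer that depends only on the interface."""

    def __init__(self, engine: AdviceEngine) -> None:
        self._engine = engine

    def handle(self, payload: dict) -> dict:
        request = AdviceRequest(payload["account_id"], payload["goal"])
        response = self._engine.advise(request)
        return {
            "account_id": response.account_id,
            "recommendations": list(response.recommendations),
        }


def integration_check(engine: AdviceEngine) -> bool:
    """Run one request through the service and verify the response shape."""
    service = AdviceService(engine)
    result = service.handle({"account_id": "acct-1", "goal": "retirement"})
    return result["account_id"] == "acct-1" and len(result["recommendations"]) > 0


print(integration_check(StubAdviceEngine()))
```

If the interface needs to change, the dataclasses and the check change together, which gives the teams a single place to synchronize.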

Also, and crucially, these separate components should not be developed in isolation. Separating them like this is necessary from a technical design standpoint, and it makes each one demonstrable to show progress; but the overall system progress should also be demonstrated as early as possible. Let’s talk about how to track progress.

Publish Metrics

Now that you have the capabilities defined, it is time to start identifying leading and trailing metrics to tell you how things are going. Each technical capability tends to have a very small number of key performance metrics—often only one—that reveal how well it is working. For an AI system it might be the accuracy of the results compared to desired results. For a Web service it might be the number of features that have been completed—the portion of the API that has been implemented: agilists call this the feature “burn down”. For a Web or mobile application it might be the burn down for user-facing features.
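The two kinds of metric mentioned here can be computed very simply. The sketch below is illustrative only: the function names, the sample goal labels, and the feature counts are invented, and a real AI system would likely need a richer notion of accuracy than exact matching.

```python
def accuracy(predicted, desired):
    """Fraction of results that exactly match the desired results."""
    if len(predicted) != len(desired):
        raise ValueError("predicted and desired must have the same length")
    if not desired:
        raise ValueError("at least one result is required")
    matches = sum(p == d for p, d in zip(predicted, desired))
    return matches / len(desired)


def burn_down(total_features, completed_per_iteration):
    """Features remaining at the start and after each iteration."""
    remaining = total_features
    series = [remaining]
    for done in completed_per_iteration:
        remaining = max(remaining - done, 0)  # never report negative work
        series.append(remaining)
    return series


# Invented sample data: 3 of 4 results match the desired results.
ai_accuracy = accuracy(
    ["save", "invest", "save", "pay_debt"],
    ["save", "invest", "invest", "pay_debt"],
)

# Invented sample data: 12 planned API features, three iterations of work.
api_burn_down = burn_down(12, [3, 4, 2])

print(ai_accuracy)    # 0.75
print(api_burn_down)  # [12, 9, 5, 3]
```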


An important point is that the set of metrics that matter changes over time. The Theory of Constraints explains this to us: once one constraint is removed, there is another that holds us back from improving. So the metric that you choose to focus on should always reflect what you believe your current constraint is.

Performance metrics are trailing metrics: they tell you what your outcomes are for a process. Ultimately we want performance metrics for how the product does in the marketplace, but right now we are talking about building enough product features to justify getting the product into market testing.

There also need to be leading metrics. They tell you whether you are using your chosen development methods well. Test coverage is a leading metric: it predicts the product quality.

See the subtopic Defining Dashboards.

Systematize the way you did it

Unless your product is a “one-and-done” effort, which is increasingly rare today given that your competitors’ products are continuously improving, you need to turn the process that you used to define the capabilities into a repeatable process.

Who was involved in defining the capabilities? How well did it go? What should you do differently? As the design of the system evolves, are additional capabilities needed? And should you continue to improve each capability – defining new and increasingly aggressive metrics for it?

One issue that arises is the prioritization of the capabilities: if two capabilities are relatively independent of each other, which should you do first? Many factors go into such a decision. Some are listed below, under “Some factors to consider when prioritizing a capability”.

Rather than trying to create a formula to take all these largely subjective things into account, we recommend discussing these factors and making a judgment call.*

We also do not recommend a formal process for prioritization. Prioritization needs to be fluid: things change, and those leading an initiative need to be constantly watching key metrics and how well things are going, and be ready to reprioritize at any time. If you try to make this process mechanical or overly analytical, you lose the benefit of the human mind’s incredible ability to generate robust predictions.

People should all know what the priorities are. If they don’t, that is a problem and it indicates that leadership is not being participative (read about participative leadership in Path-Goal Theory) and sharing what their priorities are. If people need to look at an “Agile tool” to find out what the current prioritized capabilities are, then they are not paying attention to the right things.

* One of us actually wrote a textbook that teaches you how to analyze these decisions mathematically, using statistics, “real options” analysis, and simulation, but frankly it is not usually worth the effort unless the capability in question is very large, like a power plant.

Subtopics

Coming

Some factors to consider when prioritizing a capability:

Is the capability important for the next release of the product?

Do capabilities that are important for the next product release depend on this capability?

Is the capability technically risky? For example, have your teams used the required technologies before?

How certain are you that the capability is actually wanted or needed by the users?

How much effort and time are required to create the capability?

Organizational Learning That Occurs In This Step

How to sequence and prioritize the capabilities that must be created to achieve the initiative’s goal and realize the most value.
